Notification Service With Kafka In Harris

FAQ

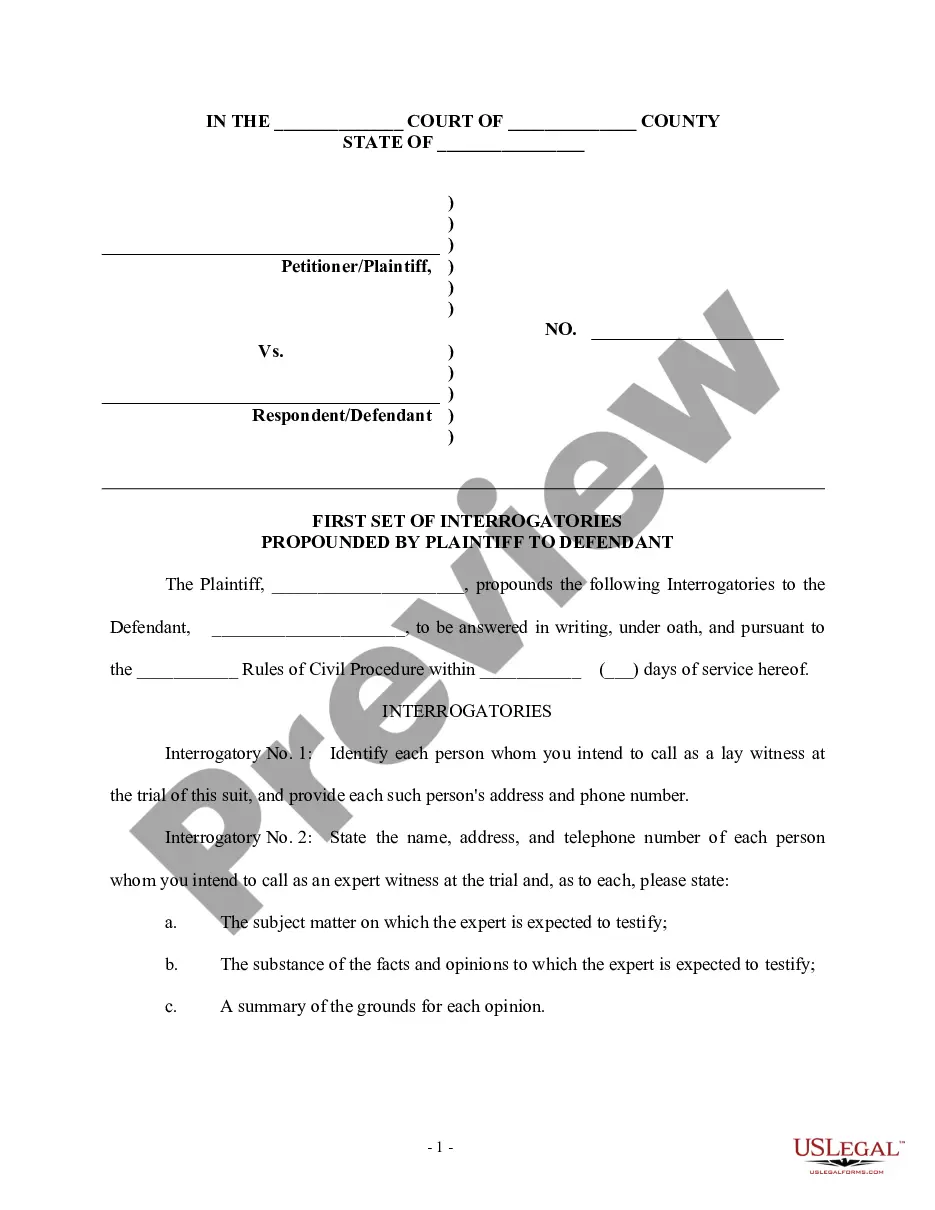

How do you produce a message into a Kafka topic using the CLI? Find your Kafka hostname and port, e.g., localhost:9092. On Kafka v2.5+, use the --bootstrap-server option; on older versions of Kafka, use the --broker-list option. Provide the mandatory parameter, the topic name, and run the kafka-console-producer.sh CLI with these options.
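As a minimal sketch of the version rule above, the following Python helper assembles the kafka-console-producer.sh command line. The function name `producer_command`, the topic name `notifications`, and passing the Kafka version as a tuple are assumptions made for illustration; only the `--bootstrap-server`, `--broker-list`, and `--topic` options come from the CLI itself.

```python
def producer_command(host, port, topic, kafka_version):
    """Build the argument list for kafka-console-producer.sh (hypothetical helper)."""
    # Kafka 2.5+ takes --bootstrap-server; older versions take --broker-list.
    server_flag = "--bootstrap-server" if kafka_version >= (2, 5) else "--broker-list"
    return [
        "kafka-console-producer.sh",
        server_flag, f"{host}:{port}",
        "--topic", topic,  # the topic name is the mandatory parameter
    ]


# "notifications" is an assumed topic name, not one taken from the text.
cmd = producer_command("localhost", 9092, "notifications", (3, 6))
print(" ".join(cmd))
# kafka-console-producer.sh --bootstrap-server localhost:9092 --topic notifications
```

Running the printed command in a shell opens an interactive prompt in which each line typed is sent to the topic as one message.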

What's the difference between Kafka and Redis? Redis is an in-memory key-value data store, while Apache Kafka is a distributed event streaming platform. You can still compare the two technologies, because both can be used to build a publish-subscribe (pub/sub) messaging system.

Pub/Sub is used for streaming analytics and data integration pipelines to load and distribute data. It's equally effective as a messaging-oriented middleware for service integration or as a queue to parallelize tasks.

Kafka and RabbitMQ are message queue systems you can use in stream processing. A data stream is high-volume, continuous, incremental data that requires high-speed processing.

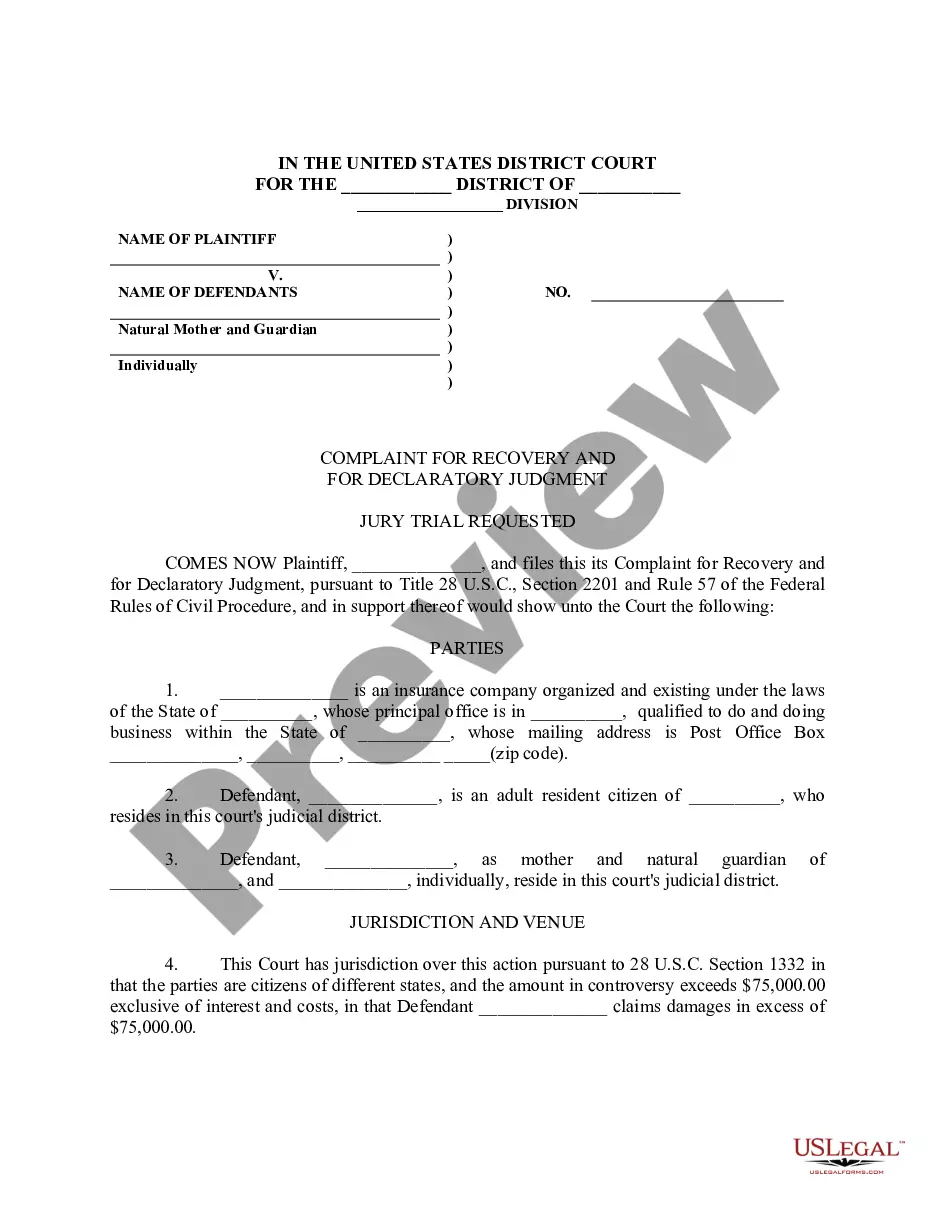

Kafka stands out for its ability to handle high throughput and provide scalability, fault tolerance, and message retention. These features make Kafka ideal for a notification system where reliability and real-time processing are crucial.

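With Kafka partitions, you can process messages in order. Kafka preserves order within each partition, and because a partition is assigned to only a single consumer within a consumer group, that consumer processes the partition's messages one by one. A traditional queue with several competing consumers does not give this ordering guarantee.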
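The ordering behavior can be illustrated with a small in-memory simulation; it does not talk to a real broker. The partition count, the keys, and the consumer names are assumptions, and `zlib.crc32` is only a stand-in for Kafka's default partitioner, which uses a murmur2 hash.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3  # assumed partition count for the illustration


def partition_for(key: str) -> int:
    # Stand-in hash: the same key always maps to the same partition.
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def assign(partitions, consumers):
    # Round-robin assignment: each partition gets exactly one consumer in the group.
    return {p: consumers[i % len(consumers)] for i, p in enumerate(partitions)}


messages = [("user-1", "a"), ("user-2", "b"), ("user-1", "c"),
            ("user-2", "d"), ("user-1", "e")]

# Append each message to its partition's log, preserving arrival order.
log = defaultdict(list)
for key, value in messages:
    log[partition_for(key)].append((key, value))

owners = assign(range(NUM_PARTITIONS), ["consumer-A", "consumer-B"])

for partition in range(NUM_PARTITIONS):
    print(partition, owners[partition], log[partition])
```

Because all messages for one key land in one partition, and one consumer owns that partition, the messages for `user-1` are seen in the order they were sent.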

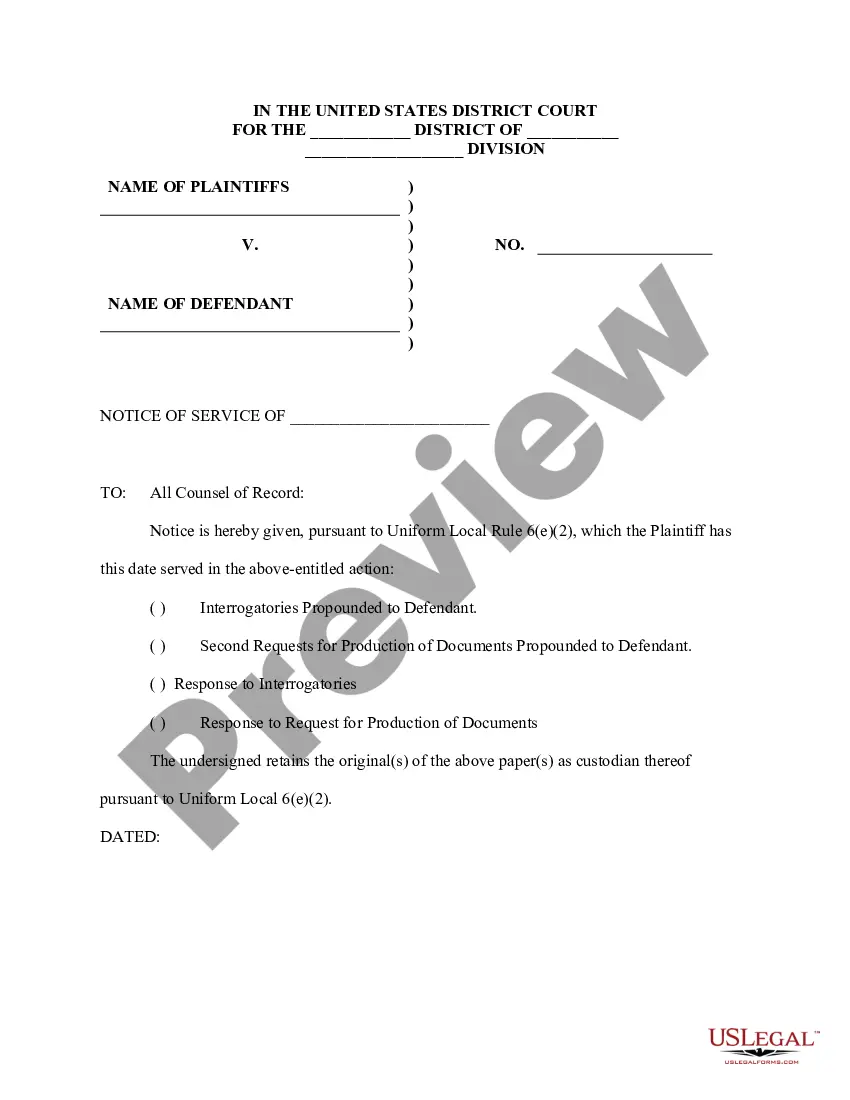

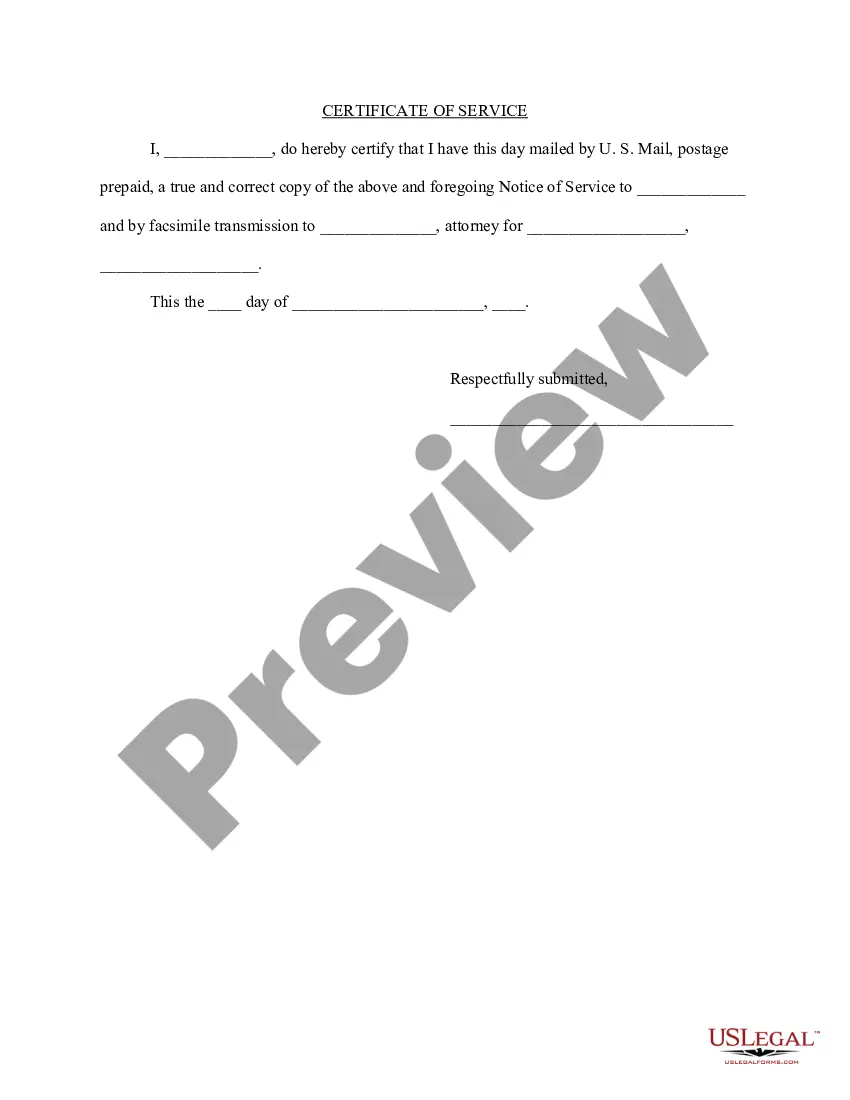

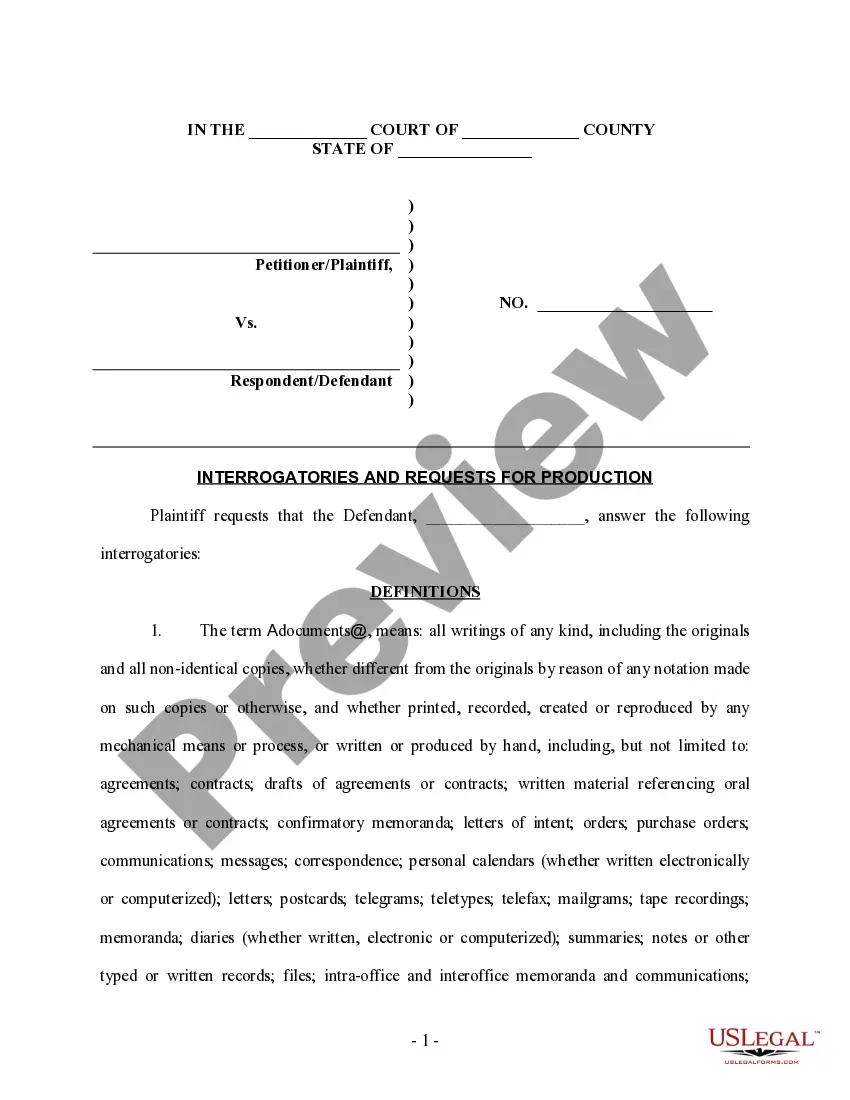

The notification email service consumes email requests from the Kafka notification topic, processes them, and forwards them to a third-party service. Modules such as PT, TL, and PGR use this service to send messages through the Kafka queue.

Architecture Overview

Apache Kafka: A distributed streaming platform that handles the storage and processing of notification messages.

Consumer: A Java application that consumes notification messages from Apache Kafka and sends them to the appropriate channels.
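The consumer's routing step might look roughly like the sketch below. It is written in Python for brevity even though the consumer described here is a Java application, and it uses an in-memory list in place of a real Kafka topic. The JSON message shape (`channel`, `to`, `body`) and the handler names are hypothetical; the source does not specify a message format.

```python
import json


def send_email(request: dict) -> str:
    # Placeholder for the call to a third-party email service.
    return f"email to {request['to']}: {request['body']}"


def send_sms(request: dict) -> str:
    # Placeholder for the call to a third-party SMS service.
    return f"sms to {request['to']}: {request['body']}"


# Maps the (assumed) "channel" field to the function that delivers it.
CHANNELS = {"email": send_email, "sms": send_sms}


def handle(raw: bytes) -> str:
    """Decode one notification message and send it to the appropriate channel."""
    request = json.loads(raw)
    handler = CHANNELS.get(request["channel"])
    if handler is None:
        raise ValueError(f"unknown channel: {request['channel']}")
    return handler(request)


# In-memory stand-in for messages read from the notification topic.
topic = [
    json.dumps({"channel": "email", "to": "a@example.com", "body": "Your bill is ready"}).encode(),
    json.dumps({"channel": "sms", "to": "+10000000000", "body": "Payment received"}).encode(),
]

results = [handle(raw) for raw in topic]
for line in results:
    print(line)
```

In a real deployment the loop over `topic` would be replaced by a Kafka consumer polling the notification topic, with each record's value passed to `handle`.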

Complete the following steps to receive messages that are published on a Kafka topic: Create a message flow containing a KafkaConsumer node and an output node. Configure the KafkaConsumer node by setting the following properties: On the Basic tab, set the following properties: