Finding Fact Samples For Gradient Boosted Decision Trees

Description

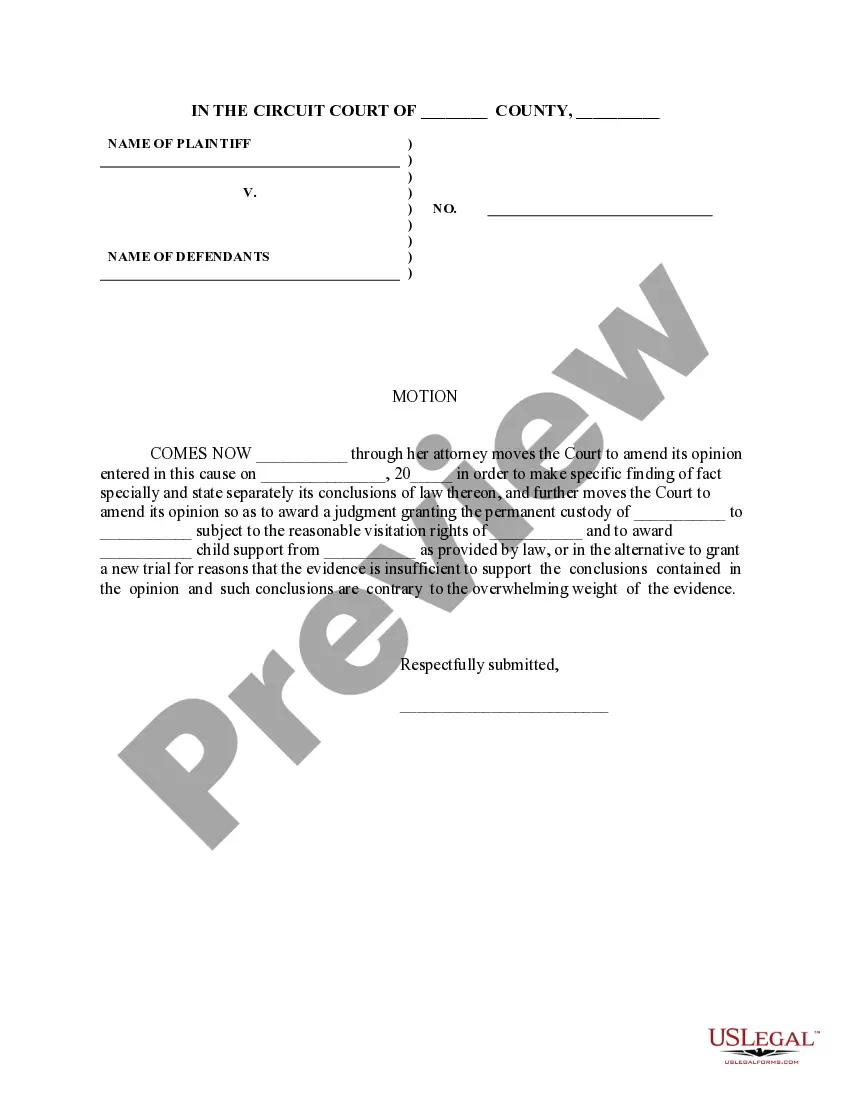

How to fill out Motion To Make Specific Findings Of Fact And State Conclusions Of Law - Domestic Relations?

Creating legal documents from scratch can often be intimidating.

Some situations may require extensive research and a significant financial investment.

If you're looking for a simpler, more affordable way to prepare the Finding Fact Samples For Gradient Boosted Decision Trees or any other document without unnecessary hassle, US Legal Forms is here to help.

Our online library of over 85,000 up-to-date legal forms covers nearly every aspect of your financial, legal, and personal affairs. With just a few clicks, you can access state- and county-specific forms carefully drafted by our legal professionals.

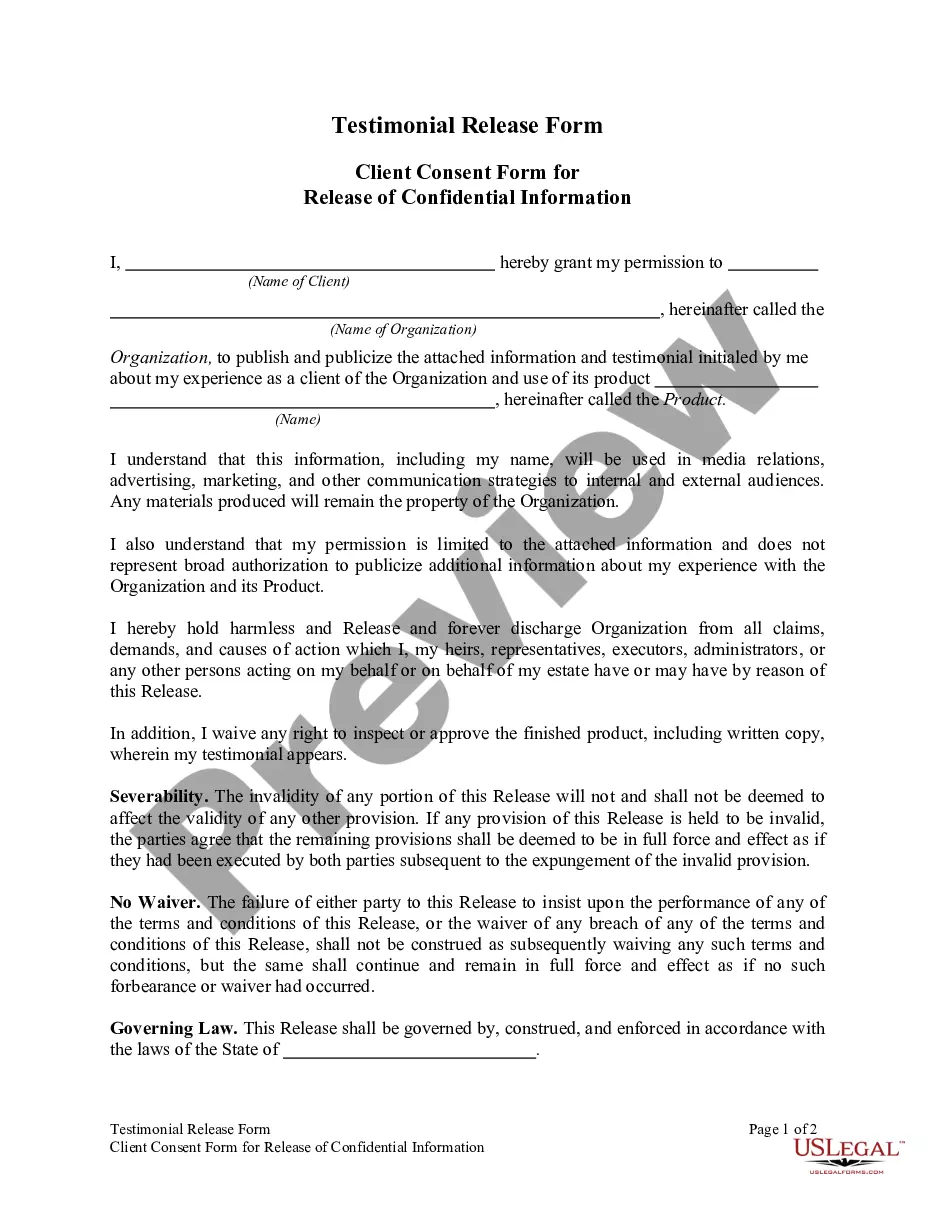

- Use our website whenever you need a dependable, trustworthy service to find and download the Finding Fact Samples For Gradient Boosted Decision Trees.

- If you already have an account with us, simply log in, find the template, and download it immediately, or retrieve it later from the My documents section.

- Don't have an account? No worries. Registering takes only a few minutes, after which you can browse the collection.

- Before downloading Finding Fact Samples For Gradient Boosted Decision Trees, review the form preview and description to confirm you have the correct form.


FAQ

XGBoost (Extreme Gradient Boosting) is indeed based on decision trees. It extends the gradient boosting framework with system-level optimizations and an explicit regularization term that penalizes tree complexity. Together these often make it faster and less prone to overfitting than a plain gradient boosting implementation.
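To make the regularization term concrete, here is a minimal pure-Python sketch of the regularized leaf weight and split gain described in the XGBoost documentation's introduction to boosted trees. `g` and `h` are per-sample first and second derivatives of the loss; `lam` and `gamma` stand in for XGBoost's `lambda` (L2 penalty on leaf weights) and `gamma` (minimum loss reduction per split) parameters. The function names are hypothetical and this is not XGBoost's API.

```python
# Sketch of XGBoost-style regularized leaf weights and split gain.
# Hypothetical helper names; lam ~ XGBoost's `lambda`, gamma ~ `gamma`.

def leaf_weight(g, h, lam):
    """Optimal leaf output w* = -G / (H + lambda)."""
    return -sum(g) / (sum(h) + lam)


def leaf_score(g, h, lam):
    """Structure score G^2 / (H + lambda) of a single leaf."""
    G, H = sum(g), sum(h)
    return G * G / (H + lam)


def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Loss reduction from splitting one leaf into left and right children.

    A larger lam shrinks leaf weights; gamma is subtracted from every
    split, so splits whose gain does not exceed it are not worth making.
    """
    parent = leaf_score(g_left + g_right, h_left + h_right, lam)
    children = leaf_score(g_left, h_left, lam) + leaf_score(g_right, h_right, lam)
    return 0.5 * (children - parent) - gamma
```

For squared error, `g` is prediction minus label and `h` is 1 for every sample. With all predictions at 0 and labels `[1, 1, -1, -1]`, separating the positives from the negatives gives a gain of 4/3 at `lam=1`, which becomes negative (no split) once `gamma` reaches 2.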

Yes, gradient boosting most commonly uses decision trees as its base learners. Trees can capture nonlinear patterns and interactions between features. Trees are added sequentially, and each new tree is fit to the mistakes the current ensemble still makes, which steadily improves model performance.
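The simplest tree base learner is a depth-1 regression tree, or "stump". The sketch below assumes a single numeric feature and the squared-error criterion; `fit_stump` and `predict_stump` are hypothetical names, not a library API.

```python
# Depth-1 regression tree ("stump") on one numeric feature, squared error.
# Hypothetical helper names for illustration.

def fit_stump(xs, targets):
    """Fit a stump; return (threshold, left_value, right_value)."""
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate identical feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        left_value = sum(left) / len(left)  # a leaf predicts its mean target
        right_value = sum(right) / len(right)
        sse = (sum((t - left_value) ** 2 for t in left)
               + sum((t - right_value) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_value, right_value)
    if best is None:  # every feature value is identical: fall back to the mean
        mean = sum(targets) / len(targets)
        return (float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value
```

In a boosted model, `targets` would be the current residuals rather than the raw labels; deeper trees apply the same split search recursively.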

Gradient boosting is not itself a decision tree; it is a method for building an ensemble, usually of decision trees. It combines many weak learners, typically shallow trees, into a stronger predictive model, with each new tree fit to correct the errors of the ensemble built so far.
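Because boosting is a procedure rather than a model, it can be written as a loop that accepts any base-learner fitting function. The following is a minimal sketch for squared-error loss; `boost` and `stump_learner` are hypothetical names, and the stump is just one possible weak learner.

```python
# Gradient boosting as a procedure wrapped around a pluggable base learner.
# fit_base(xs, residuals) must return a callable model. Hypothetical names.

def stump_learner(xs, targets):
    """Weak learner: best single split on one numeric feature (squared error)."""
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # identical feature values cannot be separated
        thr = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - lv) ** 2 for t in left) + sum((t - rv) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lv, rv)
    if best is None:  # no usable split: predict the mean everywhere
        mean = sum(targets) / len(targets)
        return lambda x: mean
    _, thr, lv, rv = best
    return lambda x: lv if x <= thr else rv


def boost(xs, ys, fit_base, n_rounds=50, learning_rate=0.1):
    """Squared-error gradient boosting around any base learner."""
    init = sum(ys) / len(ys)  # initial constant model
    models = []
    preds = [init] * len(ys)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]  # errors still left
        h = fit_base(xs, residuals)
        models.append(h)
        preds = [p + learning_rate * h(x) for p, x in zip(preds, xs)]
    return lambda x: init + learning_rate * sum(h(x) for h in models)


xs = list(range(10))
ys = [0, 0, 0, 5, 5, 5, 5, 10, 10, 10]  # a three-level step target
single = stump_learner(xs, ys)
ensemble = boost(xs, ys, stump_learner, n_rounds=200, learning_rate=0.1)
```

A single stump can produce only two output levels, so it cannot fit the three-level target; the sum of many shrunken stumps can, and its training error ends up below the single stump's.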

Preventing overfitting in gradient boosting mainly comes down to tuning hyperparameters. You can limit the number of estimators, cap the maximum depth of each tree, lower the learning rate (shrinkage), or add regularization. Early stopping, which halts training once the score on held-out validation data stops improving, also helps.
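Early stopping can be sketched in a few lines: keep adding trees while tracking validation error, stop after `patience` rounds without improvement, and keep only the trees up to the best round. This is a minimal pure-Python illustration under assumed settings (one numeric feature, stump base learners, squared error); the function names and the `patience` parameter are hypothetical, not a particular library's API.

```python
# Early stopping for squared-error boosting with stumps. Hypothetical names.

def fit_stump(xs, targets):
    """Best single-split regression stump on one numeric feature."""
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        thr = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - lv) ** 2 for t in left) + sum((t - rv) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lv, rv)
    _, thr, lv, rv = best
    return lambda x: lv if x <= thr else rv


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


def boost_with_early_stopping(x_tr, y_tr, x_va, y_va, max_rounds=500,
                              learning_rate=0.1, patience=10):
    """Add stumps until validation MSE fails to improve for `patience` rounds.

    Returns (initial_value, trees) truncated to the best round count.
    """
    init = sum(y_tr) / len(y_tr)
    p_tr = [init] * len(y_tr)
    p_va = [init] * len(y_va)
    trees = []
    best_loss, best_rounds = mse(y_va, p_va), 0
    for _ in range(max_rounds):
        h = fit_stump(x_tr, [y - p for y, p in zip(y_tr, p_tr)])
        trees.append(h)
        p_tr = [p + learning_rate * h(x) for p, x in zip(p_tr, x_tr)]
        p_va = [p + learning_rate * h(x) for p, x in zip(p_va, x_va)]
        loss = mse(y_va, p_va)
        if loss < best_loss:
            best_loss, best_rounds = loss, len(trees)
        elif len(trees) - best_rounds >= patience:
            break  # no validation improvement for `patience` rounds
    return init, trees[:best_rounds]


# Noisy step function for training, clean step for validation.
x_tr = [i * 0.5 for i in range(40)]
y_tr = [(1.0 if x > 10 else 0.0) + (0.3 if i % 2 else -0.3) for i, x in enumerate(x_tr)]
x_va = [i * 0.5 + 0.25 for i in range(40)]
y_va = [1.0 if x > 10 else 0.0 for x in x_va]
init, trees = boost_with_early_stopping(x_tr, y_tr, x_va, y_va, max_rounds=300)
```

Truncating to the best round, rather than keeping every tree trained before the stop, is what prevents the extra rounds from fitting training noise.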

Using a gradient boosting classifier means fitting it to your data with chosen hyperparameters such as the learning rate and the number of estimators. Prepare your data, import the necessary library, and create the classifier instance; then train the model on your training set and evaluate its performance on held-out validation data.
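The steps above can be sketched end to end without any library. `TinyGBClassifier` is a hypothetical, deliberately minimal binary classifier using log loss and stumps on one numeric feature; it only loosely mirrors the common fit/predict naming and is not scikit-learn's API. One simplification to note: each leaf outputs the mean pseudo-residual (a plain gradient step), whereas scikit-learn's `GradientBoostingClassifier` uses a Newton-style leaf update.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_stump(xs, targets):
    """Regression stump (one numeric feature) fit to pseudo-residuals."""
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        thr = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - lv) ** 2 for t in left) + sum((t - rv) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lv, rv)
    _, thr, lv, rv = best
    return lambda x: lv if x <= thr else rv


class TinyGBClassifier:
    """Hypothetical minimal binary gradient boosting classifier (log loss)."""

    def __init__(self, n_estimators=100, learning_rate=0.1):
        self.n_estimators = n_estimators
        self.learning_rate = learning_rate

    def fit(self, xs, ys):
        pos = sum(ys) / len(ys)  # assumes both classes are present
        self.init_ = math.log(pos / (1 - pos))  # log-odds of the positive class
        self.trees_ = []
        raw = [self.init_] * len(xs)
        for _ in range(self.n_estimators):
            # Negative gradient of log loss w.r.t. the raw score is y - p.
            residuals = [y - sigmoid(f) for y, f in zip(ys, raw)]
            h = fit_stump(xs, residuals)
            self.trees_.append(h)
            raw = [f + self.learning_rate * h(x) for f, x in zip(raw, xs)]
        return self

    def predict_proba(self, x):
        raw = self.init_ + self.learning_rate * sum(h(x) for h in self.trees_)
        return sigmoid(raw)

    def predict(self, x):
        return int(self.predict_proba(x) >= 0.5)


# Prepare data, create, train, then evaluate on held-out points.
x_tr = list(range(20))
y_tr = [1 if x >= 10 else 0 for x in x_tr]
clf = TinyGBClassifier(n_estimators=50, learning_rate=0.1).fit(x_tr, y_tr)
x_va, y_va = [0.5, 3.5, 12.5, 18.5], [0, 0, 1, 1]
accuracy = sum(clf.predict(x) == y for x, y in zip(x_va, y_va)) / len(y_va)
```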

Plain decision trees are not trained with gradient descent. Instead, they grow greedily, choosing at each node the split on a feature value that most improves a criterion such as Gini impurity or squared error. In gradient boosted decision trees, however, the boosting procedure itself is a form of gradient descent in function space: each new tree is fit to the negative gradient of the loss with respect to the current predictions, so adding it moves the model downhill on the loss function.
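The "gradient descent in function space" view can be checked numerically. This sketch (hypothetical helper names) estimates the negative gradient of a loss with respect to the current prediction by central finite differences. For squared error it recovers the residual y - f, and for log loss it recovers y - p; these pseudo-residuals are the targets each new tree is fit to.

```python
import math


def squared_loss(y, f):
    return 0.5 * (y - f) ** 2


def log_loss(y, f):
    """Binary log loss; f is the raw score (log-odds), y is 0 or 1."""
    p = 1.0 / (1.0 + math.exp(-f))
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def negative_gradient(loss, y, f, eps=1e-6):
    """Central finite-difference estimate of -dL/df at prediction f."""
    return -(loss(y, f + eps) - loss(y, f - eps)) / (2 * eps)


# One functional gradient-descent step on a single prediction.
y, f, step = 3.0, 1.0, 0.1
r = negative_gradient(squared_loss, y, f)  # approximately y - f = 2.0
f_new = f + step * r                       # moves the prediction toward y
```

In a real model, a tree approximates these per-sample values so the step generalizes to unseen inputs.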

Gradient boosting does not inherently rely on surrogate splits, which are a CART technique (used, for example, by R's rpart package). Widely used libraries such as XGBoost, LightGBM, and scikit-learn's HistGradientBoosting estimators instead learn, at each split, a default branch for samples whose feature value is missing, choosing whichever branch reduces the loss more. As a result, these implementations can train and predict on data with missing values without prior imputation.
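Libraries such as XGBoost and LightGBM handle a missing feature value by learning a default direction for each split. The sketch below is a simplified illustration under stated assumptions: one feature, a split threshold that has already been chosen, `None` marking a missing value, and plain squared error instead of the gradient-based gain real implementations use. The function names are hypothetical.

```python
# Learn a default direction for missing values at one fixed split.
# Simplified: squared error instead of gradient statistics. Hypothetical names.

def sse(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_missing_direction(xs, targets, threshold):
    """Send samples with x=None to whichever side of x <= threshold costs less."""
    left = [t for x, t in zip(xs, targets) if x is not None and x <= threshold]
    right = [t for x, t in zip(xs, targets) if x is not None and x > threshold]
    missing = [t for x, t in zip(xs, targets) if x is None]
    cost_left = sse(left + missing) + sse(right)
    cost_right = sse(left) + sse(right + missing)
    return "left" if cost_left <= cost_right else "right"
```

At prediction time, a sample with a missing value simply follows the direction recorded for that split, so no imputation step is needed.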

Gradient-boosted decision trees combine multiple decision trees sequentially to improve predictions. Each tree is fit to correct the errors the previous trees left behind, so the ensemble progressively concentrates on the instances that are hardest to predict and can learn complex, nonlinear relationships in the data.
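The sequential procedure can be written compactly in the form of Friedman's gradient boosting algorithm, simplified here so that a fixed learning rate $\nu$ replaces the per-leaf line search. $L$ is the loss, $F_m$ the ensemble after $m$ trees, $r_{im}$ the pseudo-residuals, and $M$ the number of trees:

$$
\begin{aligned}
F_0(x) &= \arg\min_{\gamma} \sum_{i=1}^{n} L(y_i, \gamma) \\
r_{im} &= -\left[\frac{\partial L(y_i, F(x_i))}{\partial F(x_i)}\right]_{F = F_{m-1}}, \quad i = 1, \dots, n \\
h_m &= \text{regression tree fit to } \{(x_i, r_{im})\}_{i=1}^{n} \\
F_m(x) &= F_{m-1}(x) + \nu\, h_m(x), \quad m = 1, \dots, M
\end{aligned}
$$

For squared error $L(y, F) = \tfrac{1}{2}(y - F)^2$, the pseudo-residual $r_{im}$ is simply $y_i - F_{m-1}(x_i)$, which is why each tree is described as correcting the errors of the previous ones.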

GradientBoostingRegressor is scikit-learn's implementation of gradient boosting for regression. Its prediction is an initial constant plus the sum of many regression trees, each scaled by the learning rate. Each tree is fit to the pseudo-residuals left by the trees before it; with the default squared-error loss these are simply the residuals. This lets the model adapt to a wide range of patterns in the data.

A gradient boosted decision tree model combines several decision trees to improve predictive accuracy. Training starts from a simple initial model, such as a constant prediction, and each subsequent tree is fit to the residuals (errors) of the model so far. This concentrates later trees on the hard-to-predict cases, improving overall performance.
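A single boosting round can be worked through by hand. The four data points and the learning rate of 0.5 below are illustrative choices (picked for round numbers), using squared error: the initial model is the mean, the stump is fit to the residuals, and the update adds the scaled stump output.

```python
# One boosting round on four points with squared error (illustrative data).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.0, 2.0, 6.0, 7.0]
learning_rate = 0.5

f0 = sum(ys) / len(ys)                  # initial simple model: the mean, 4.0
residuals = [y - f0 for y in ys]        # [-3.0, -2.0, 2.0, 3.0]

# The best stump here splits at x <= 2.5; each leaf predicts its mean residual.
left = [r for x, r in zip(xs, residuals) if x <= 2.5]   # [-3.0, -2.0]
right = [r for x, r in zip(xs, residuals) if x > 2.5]   # [2.0, 3.0]
left_value = sum(left) / len(left)      # -2.5
right_value = sum(right) / len(right)   # 2.5

f1 = [f0 + learning_rate * (left_value if x <= 2.5 else right_value) for x in xs]
# f1 == [2.75, 2.75, 5.25, 5.25]

mse_before = sum(r * r for r in residuals) / len(ys)                # 6.5
mse_after = sum((y - f) ** 2 for y, f in zip(ys, f1)) / len(ys)     # 1.8125
```

The next round would fit a new stump to the remaining residuals `[-1.75, -0.75, 0.75, 1.75]`.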